In the rush to adopt Generative AI, the corporate world has hit a wall. It is invisible to the naked eye but painfully obvious on the balance sheet. It is the Implementation Gap. Recent data presented at the Artificial Intelligence Innovation Summit (November 2025) revealed a stark reality: while 88% of organizations use deterministic AI in some capacity, only 7% have successfully deployed Generative AI at scale.

The Mathematics of Failure

The industry now faces a projected 85% failure rate for upcoming AI projects. Why is the failure rate so high when the technology is so powerful? The answer lies in a fundamental misunderstanding of what AI implementation actually requires.

For the last two years, the conversation has been dominated by Large Language Models (LLMs), context windows, and parameter counts. Organizations have rushed to build the perfect "Tech Stack." However, as Izabela Lundberg emphasized at the summit, you can re-engineer a process in a week, but you cannot re-engineer a human mindset in the same timeframe.

This disconnect between technological capability and human readiness is the root cause of the 85% Cliff. Companies invest millions in AI infrastructure, only to watch their employees revert to manual processes within weeks of deployment. The technology works perfectly in demos but fails spectacularly in the messy reality of daily operations.

The Trap of "Tech-First" Architecture

The "Tech-First" approach treats AI as a software update—a plug-and-play solution to efficiency problems. This approach fails because AI is not a calculator; it is a collaborator. When you introduce a collaborator into a workspace without establishing trust, defining roles, or addressing fears, the team rejects it.

Babar Bhatti of IBM made this point in his session on workflow transformation. When organizations rush to deploy GenAI without human governance, they create a "compounding error machine." The 85% failure rate is usually not a total system crash; it is a "trust crash." Employees try the tool, it hallucinates once, and they abandon it for good, returning to manual methods.

Consider a typical scenario: A hospital deploys an AI system to generate patient discharge summaries. The AI produces excellent results 95% of the time. But the 5% of cases where it hallucinates a medication dosage or misses a critical allergy creates a catastrophic loss of trust. Nurses stop using the system entirely, not because it's mostly wrong, but because they can't afford to be wrong even once.
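A quick calculation shows why a 95% success rate can still end in a trust crash. The sketch below is illustrative, not data from the summit: it assumes, hypothetically, that every summary is independently correct 95% of the time (real errors are rarely independent), and `p_at_least_one_error` is a name introduced here for the example.

```python
def p_at_least_one_error(accuracy: float, n: int) -> float:
    """Chance that at least one of n independent outputs is wrong,
    when each output is correct with probability `accuracy`."""
    return 1 - accuracy ** n


# Assumption: each discharge summary is independently correct 95% of the time.
for n in (1, 5, 10, 20):
    print(f"{n:>2} summaries -> {p_at_least_one_error(0.95, n):.0%} chance of at least one error")
# prints 5%, 23%, 40% and 64% for n = 1, 5, 10, 20
```

Under these assumptions, a nurse who reads 20 summaries has roughly a 64% chance of having seen at least one error. The same arithmetic is one way to read the "compounding error machine" idea: in a workflow of five chained AI steps that are each 95% reliable, the end-to-end result is correct only about 77% of the time (0.95^5 ≈ 0.774).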

The Governance Solution: AI-in-the-Loop (AIITL)

To survive the "AI Cliff," companies must shift their focus from Technology Architecture to Business Value Architecture. But more importantly, they must redefine the relationship between the human and the machine.

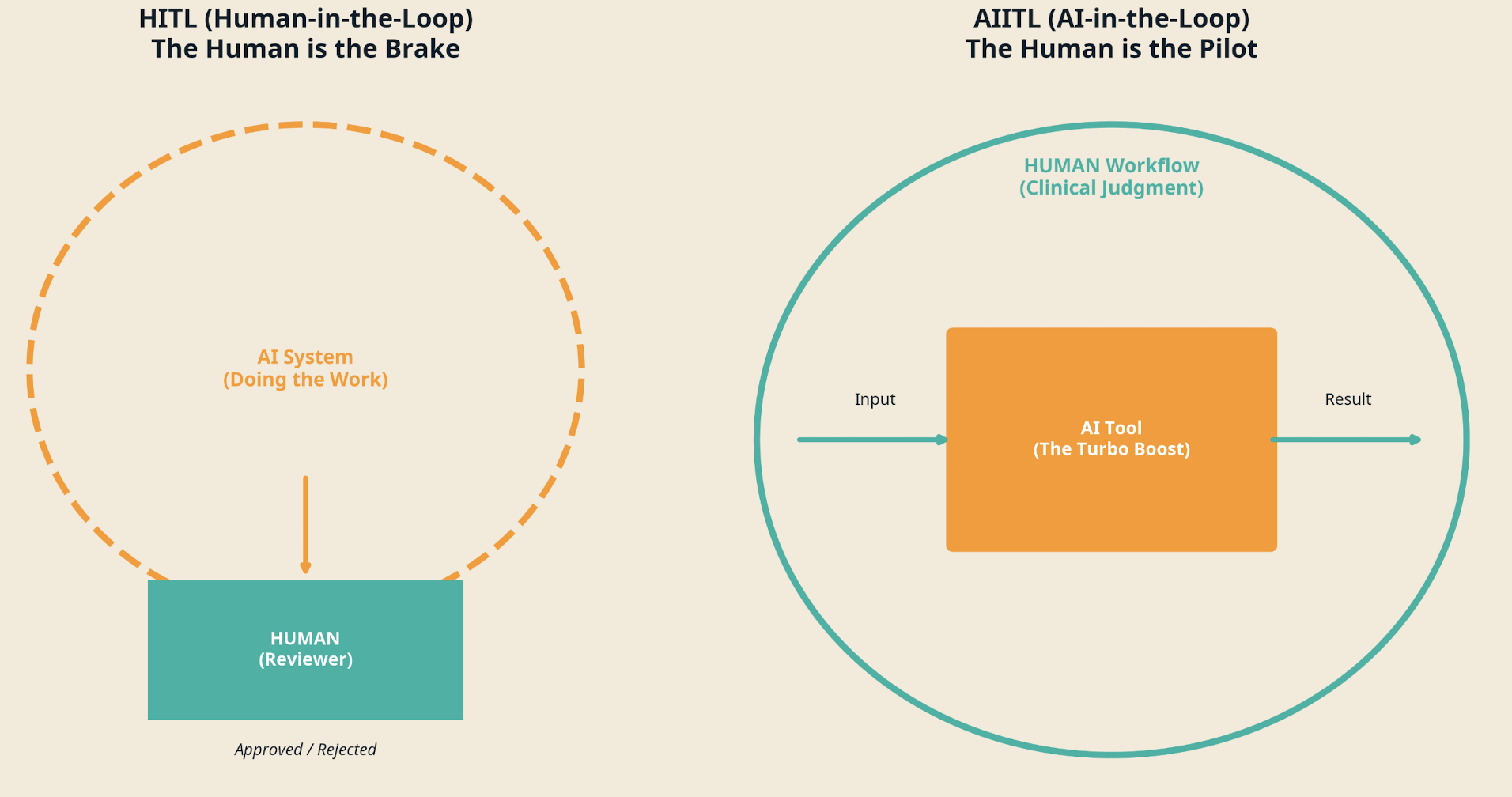

Stop Being the Passenger (HITL)

The industry standard has long been Human-in-the-Loop (HITL). In this outdated model, the AI acts as the car, and the human sits in the passenger seat with the emergency brake, terrified of the next error.

- HITL Dynamic: The AI does the work. The Human checks for errors.

- The Result: The human becomes a glorified spellchecker, leading to boredom, fatigue, and eventually, missed errors.

- The Problem: This model treats humans as quality control, not as strategic thinkers. It's demoralizing and ineffective.

Start Being the Pilot (AIITL)

To succeed in 2026, we must flip the script to AI-in-the-Loop (AIITL).

- AIITL Dynamic: The Human drives the car (strategy/empathy). The AI acts as the GPS and mechanic (data/suggestions).

- The Result: The human is empowered to work at the "Top of License," using AI to augment their capabilities rather than replace their judgment.

- The Advantage: This model keeps humans engaged, creative, and accountable. They're not just checking boxes; they're making strategic decisions with AI-powered insights.
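For technical readers, the difference between the two models comes down to who produces the final output. The sketch below is illustrative only: `hitl`, `aiitl`, `Outcome` and the callback names are hypothetical, not part of any framework presented at the summit, and the human and AI steps are stand-in functions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Outcome:
    final_output: str                      # the work product that actually ships
    authored_by: str                       # who produced the final output
    ai_contributions: List[str] = field(default_factory=list)


def hitl(task: str,
         ai_generate: Callable[[str], str],
         human_approves: Callable[[str], bool]) -> Optional[Outcome]:
    """Passenger model: the AI does the work; the human can only approve or veto it."""
    draft = ai_generate(task)
    if human_approves(draft):
        return Outcome(final_output=draft, authored_by="AI")
    return None  # vetoed: nothing ships, and the human has authored nothing


def aiitl(task: str,
          human_plan: Callable[[str], str],
          ai_suggest: Callable[[str], List[str]],
          human_decide: Callable[[str, List[str]], str]) -> Outcome:
    """Pilot model: the human sets the strategy and makes the call; the AI advises."""
    plan = human_plan(task)                  # strategy and context come from the human
    suggestions = ai_suggest(plan)           # the AI surfaces data, research, warnings
    final = human_decide(plan, suggestions)  # the human keeps final authority
    return Outcome(final_output=final, authored_by="human",
                   ai_contributions=suggestions)


# Hypothetical usage with stand-in functions for the human and AI steps.
outcome = aiitl(
    "patient case 001",
    human_plan=lambda t: f"treatment plan for {t}",
    ai_suggest=lambda p: ["check drug interactions", "review recent studies"],
    human_decide=lambda p, s: f"{p} (after reviewing {len(s)} AI suggestions)",
)
print(outcome.authored_by)   # human
print(outcome.final_output)  # treatment plan for patient case 001 (after reviewing 2 AI suggestions)
```

In the HITL version, whatever ships is always the AI's draft, and a rejection leaves nothing behind. In the AIITL version, the AI's suggestions are recorded as inputs, but the human writes the decision.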

The Governance Formula

If the technology is ready but the people are not, the solution is Human-Centric Governance. Izabela Lundberg proposed a specific formula for this transition:

Success = Trust + Learning + Collaboration

Trust involves addressing the fears of job replacement and the risks of hallucination. Organizations must be transparent about AI's capabilities and limitations. They must create psychological safety where employees can experiment without fear of punishment for AI errors.

Learning means moving beyond "prompt engineering" to "critical thinking." The most valuable skill in 2026 won't be writing the perfect prompt; it will be knowing when to trust the AI's output and when to challenge it. This requires training employees to think like auditors, not just operators.

Collaboration requires viewing AI as a partner in the workflow, not a replacement for it. This means redesigning processes so that humans and AI work together, each contributing their unique strengths. The human provides context, empathy, and strategic judgment. The AI provides speed, scale, and analytical power.

Real-World Application: Healthcare

In healthcare, the AIITL model is particularly powerful. Consider a clinical decision support system. In a HITL model, the AI generates a treatment recommendation, and the doctor simply approves or rejects it. This creates liability concerns and erodes the doctor's expertise.

In an AIITL model, the doctor interviews the patient, uses their clinical judgment to formulate a treatment strategy, and then asks the AI to surface relevant research, analyze drug interactions, and identify potential complications. The doctor remains the pilot, but the AI dramatically amplifies their capabilities.

This approach doesn't just improve outcomes; it improves job satisfaction. Doctors report feeling more empowered, not replaced. They're able to spend more time on the human aspects of care—empathy, communication, strategic thinking—while the AI handles the data-intensive grunt work.

Conclusion: The Human Stack

We are entering an era where the competitive advantage will not belong to those with the most powerful AI, but to those with the most adaptable humans. The high failure rate of AI projects is a signal that we have over-indexed on silicon and under-indexed on psychology. To scale AI successfully, we must build the "human stack" as robustly as we build the tech stack.

The 85% Cliff is not a technology problem. It's a people problem. And the solution is not better AI; it's better governance. By shifting from a tech-first to a human-first mindset, by embracing the AIITL framework, and by focusing on trust, learning, and collaboration, organizations can cross the chasm and join the 15% that succeed.

The AI revolution is not about replacing humans. It's about empowering them. The companies that understand this will be the ones that thrive in the age of AI.

References

- Artificial Intelligence Innovators Network (2025) AI, ChatGPT, Gemini and Copilot Innovation Summit [Webinar]. 20 November. Available at: https://andysquire.ai/latest-insights (Accessed: 21 November 2025).

- Lundberg, I. (2025) 'Human Governance and Ethical Oversight', presented at Artificial Intelligence Innovation Summit, 20 November.

- Smilingyte, L. (2025) 'AI Strategies for Enterprise Success: From Adoption to Value', presented at Artificial Intelligence Innovation Summit, 20 November.

- Bhatti, B. (2025) 'Transforming Workflows with IBM's AI Stack', presented at Artificial Intelligence Innovation Summit, 20 November.

- Squire, A. (2025) The New Governance (AIITL: The Pilot vs HITL: The Passenger). Available at: https://andysquire.ai (Accessed: 21 November 2025).