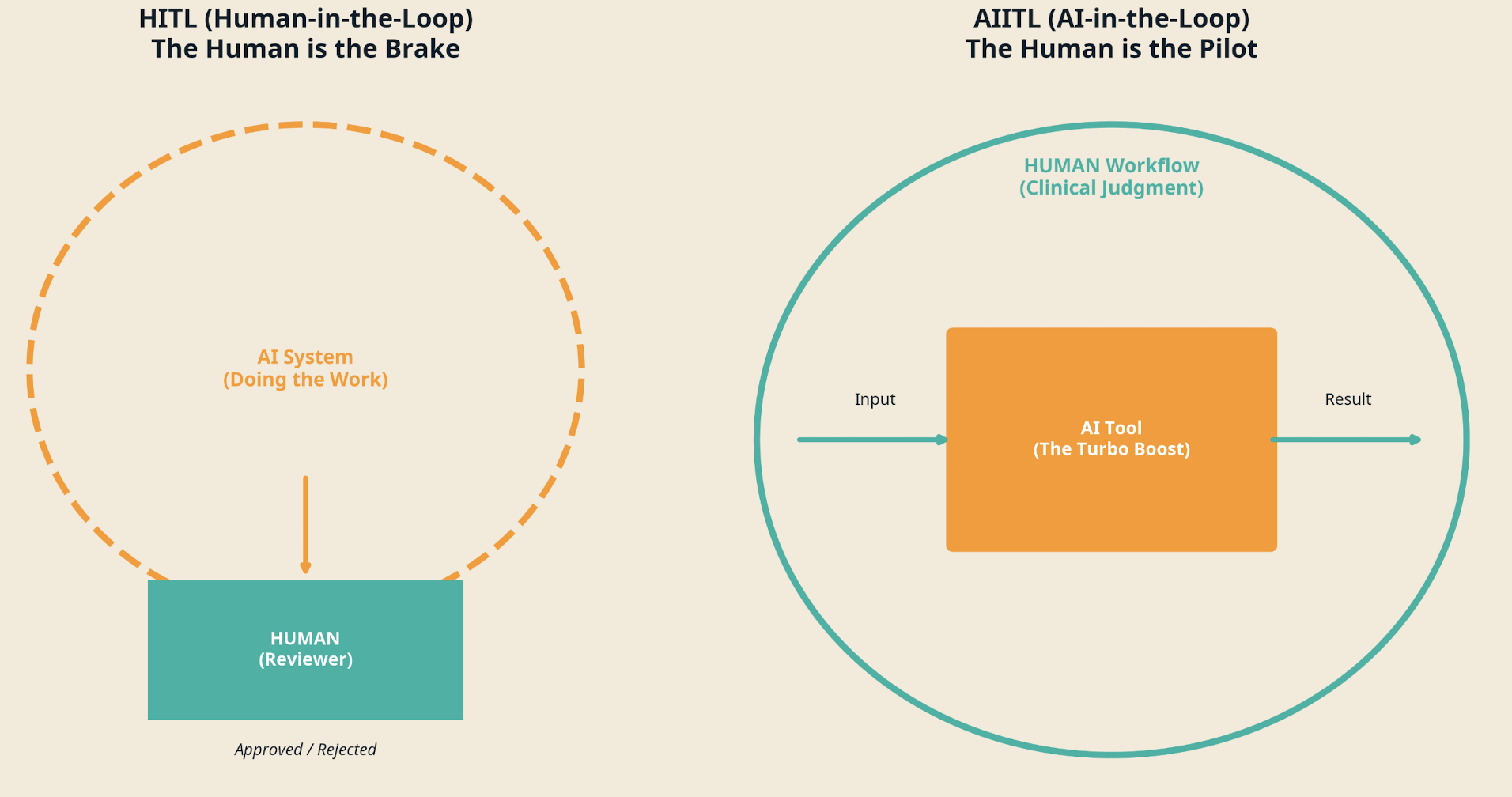

For the past decade, "Human-in-the-Loop" (HITL) has been the gold standard for AI safety. It sounds comforting: let the AI do the work, but keep a human nearby to hit the emergency brake if things go wrong. We are now discovering that this model is fundamentally flawed.

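As AI models move from deterministic tools to generative agents, the HITL model runs into a problem that aviation psychology and human-factors research call "vigilance decrement": attention erodes when a person monitors a system that rarely fails. The result is a dangerous paradox. The more reliable we make the AI, the less attention the human pays to it, and the more likely the eventual failure is to go unnoticed until it is serious.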

At AndySquire.ai, we are advocating for a structural shift in enterprise governance: moving from Human-in-the-Loop (HITL) to AI-in-the-Loop (AIITL). This is not just a semantic change; it is the difference between a human acting as a passive passenger and a human acting as an active pilot.

The "Rubber Stamp" Danger of HITL

To understand why the old model is failing, we must look at the workflow of a traditional HITL system.

- Step 1: The AI analyzes data.

- Step 2: The AI generates a conclusion or draft.

- Step 3: The Human reviews it.

- Step 4: The Human approves it.

In theory, Step 3 is the safety layer. In practice, it is the point of failure. Cognitive science tells us that humans are terrible monitors of highly reliable systems. When an AI is right 95% of the time, the human brain conditions itself to expect success. The reviewer scans the document, their eyes glaze over, and they "rubber stamp" the output.

We call this Cognitive Atrophy. When the human is placed after the work is done, they are not thinking; they are merely auditing. Over time, their own skills degrade, and they lose the context required to spot the subtle hallucinations that GenAI is famous for producing.
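
The rubber-stamp effect can be put in rough numbers. The sketch below is a deliberately simple model, not a measured result: it assumes the share of outputs that ship with an error is the AI's error rate multiplied by the share of errors the reviewer misses. The function name and every figure in it are hypothetical, chosen only to show the shape of the argument.

```python
def undetected_error_rate(ai_error_rate: float, reviewer_catch_rate: float) -> float:
    """Fraction of all outputs that are wrong AND slip past the human reviewer.

    Simplifying assumption: the reviewer catches a fixed share of AI errors,
    independent of the kind of error.
    """
    if not (0.0 <= ai_error_rate <= 1.0 and 0.0 <= reviewer_catch_rate <= 1.0):
        raise ValueError("rates must be between 0 and 1")
    return ai_error_rate * (1.0 - reviewer_catch_rate)


# Hypothetical numbers, for illustration only.
attentive = undetected_error_rate(ai_error_rate=0.05, reviewer_catch_rate=0.90)
complacent = undetected_error_rate(ai_error_rate=0.02, reviewer_catch_rate=0.50)

print(f"95% accurate AI, attentive reviewer:  {attentive:.3%} of outputs ship with an error")
print(f"98% accurate AI, complacent reviewer: {complacent:.3%} of outputs ship with an error")
```

Under these assumed figures, the more accurate AI paired with the less attentive reviewer lets through twice as many errors. Whether real reviewers degrade this sharply is an empirical question the sketch does not answer.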

"The 'Safety Paradox' of HITL: The better the AI gets, the worse the human becomes at supervising it."

The Solution: AI-in-the-Loop (AIITL)

AI-in-the-Loop (AIITL) inverts the workflow. It restores "Commander’s Intent" to the process. In this model, the human is not the safety net; the human is the architect.

How AIITL Workflows Function

- Step 1 (Human Intent): The Human defines the strategy, the diagnosis, or the creative vision.

- Step 2 (AI Augmentation): The Human calls upon the AI to fetch data, run simulations, or critique the strategy.

- Step 3 (Synthesis): The Human integrates the AI's input into their decision.

- Step 4 (Execution): The Human executes the final action.

In this model, the human brain never goes "offline." Because the human initiates the action, they maintain Situational Awareness. The AI is not driving the car; the AI is the GPS, offering routes that the human driver can choose to accept or ignore.
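
The four AIITL steps above can be sketched as a small workflow in which the AI cannot be consulted until a human has stated an intent. This is a minimal illustration under our own assumptions, not a reference implementation: `Decision`, `aiitl_decide`, `stub_advisor`, and `analyst_synthesis` are hypothetical names, and `stub_advisor` stands in for whatever model call an organization actually uses.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for any model call (not a real library API).
Advisor = Callable[[str], str]


@dataclass
class Decision:
    owner: str                                        # the accountable human
    intent: str                                       # Step 1: human intent
    advice: List[str] = field(default_factory=list)   # Step 2: AI augmentation
    final: str = ""                                   # Step 3: human synthesis
    executed: bool = False                            # Step 4: human execution


def aiitl_decide(
    owner: str,
    intent: str,
    questions: List[str],
    advisor: Advisor,
    synthesize: Callable[[str, List[str]], str],
) -> Decision:
    """Run one AIITL cycle: the AI is consulted only after intent exists."""
    if not intent.strip():
        raise ValueError("AIITL starts with human intent; none was provided")
    decision = Decision(owner=owner, intent=intent)
    for question in questions:
        # The AI answers questions the human chose to ask; it never proposes the plan.
        decision.advice.append(advisor(f"Plan: {intent}\nQuestion: {question}"))
    # The human-supplied function weighs the advice and may ignore it entirely.
    decision.final = synthesize(intent, decision.advice)
    decision.executed = True
    return decision


def stub_advisor(prompt: str) -> str:
    """Placeholder for a model call; returns a canned critique."""
    return "Consider liquidity risk."


def analyst_synthesis(intent: str, advice: List[str]) -> str:
    """Placeholder for human judgment: keep the plan, note what was considered."""
    return f"{intent} (reviewed {len(advice)} AI comment(s))"


decision = aiitl_decide(
    owner="analyst",
    intent="Rebalance toward bonds",
    questions=["What could break this plan?"],
    advisor=stub_advisor,
    synthesize=analyst_synthesis,
)
print(decision.final)
```

The design choice worth noting is the guard at the top of `aiitl_decide`: the record always names an owner and an intent before any AI output exists, which is what keeps the human in the pilot's seat.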

Why AIITL is Safer: The Evidence

Safety isn't just about preventing errors; it's about accountability and recovery. Here is why we argue the AIITL framework is the safer and more accountable choice for the modern enterprise.

1. Prevention of Automation Bias

"Automation Bias" is the tendency for humans to favor suggestions from automated decision-making systems and to discount contradictory information from non-automated sources, even when that information is correct. In a HITL model, the AI speaks first, anchoring the human to its answer. In an AIITL model, the human formulates a hypothesis before consulting the AI, reducing the risk of being swayed by a confident but hallucinating model.

2. Liability and Accountability

From a governance perspective, HITL is a legal minefield. If an AI makes a mistake and a human rubber-stamps it, who is to blame? The exhausted human or the "black box" algorithm?

In an AIITL framework, accountability remains firmly with the human. Because the human is the "Pilot," utilizing the AI as a tool, the chain of command is unbroken. This aligns with emerging regulatory frameworks, including the EU AI Act, which requires effective human oversight of high-risk systems, with the human as an active controller rather than a passive viewer.

3. Preservation of Human Expertise

If we rely on HITL, junior employees will never learn. They will spend their careers approving AI outputs without understanding the first principles of the work. They will become approvers rather than practitioners.

AIITL demands that the human remains the expert. To ask the AI the right questions, you must understand the subject matter. This governance model ensures that your workforce continues to upskill, using AI to go deeper (as discussed in our Summit 2025 analysis) rather than getting shallower.

Implementing AIITL: The "Pilot's Checklist"

Moving from HITL to AIITL requires a culture shift. It means training your team to stop asking, "Is this AI output correct?" and start asking, "How can I use AI to verify my output?"

For Healthcare: Don't use AI to diagnose the patient and have the doctor sign off. Let the doctor diagnose the patient, and use AI to check for drug interactions or rare disease correlations the doctor might have missed.

For Finance: Don't use AI to write the investment strategy. Let the analyst write the strategy, and use AI to stress-test it against 50 years of market crashes.

Conclusion

We cannot automate accountability. The "Human-in-the-Loop" model was a necessary bridge during the early days of AI, but in the era of generative agents, it is becoming a liability.

Real safety comes from engagement, not monitoring. By adopting the AI-in-the-Loop governance structure, organizations can harness the speed of AI without sacrificing the essential wisdom of the human pilot.

References

- Endsley, M. R. (2024) 'From Automation to Autonomy: The Vigilance Decrement in AI Oversight', Journal of Cognitive Engineering and Decision Making, 18(3), pp. 204-219.

- Parasuraman, R. and Manzey, D. H. (2010) 'Complacency and Bias in Human Interaction with Automation: An Integrative Review', Human Factors, 52(3), pp. 381-410.

- European Commission (2025) The AI Act: Regulatory Frameworks for High-Risk AI Systems. Brussels: EU Publishing.

- Gartner (2025) Strategic Roadmap for Enterprise AI Governance: Moving Beyond the Loop. Stamford: Gartner Research.

- Squire, A. (2025) The Pilot vs. The Passenger: Redefining Human Agency in the Age of AI. Available at: https://andysquire.ai (Accessed: 21 November 2025).